Distillation-free Text-to-3D via Guided Multi-view Diffusion

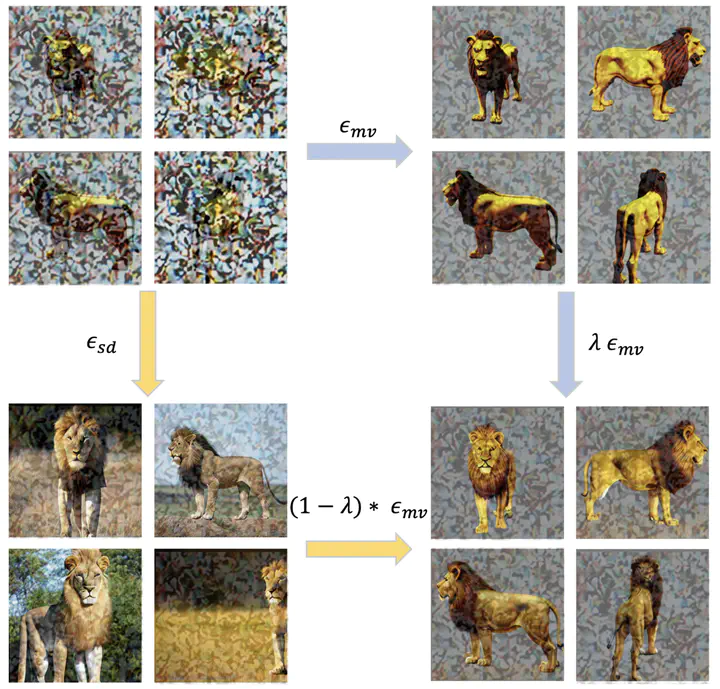

Joint Sample with 2D and Multi-view Diffusion

Joint Sample with 2D and Multi-view DiffusionWe propose a method that can generate multi-view images of objects from text prompts with high fidelity. There has been considerable progress in the field of 3D generation thanks to large synthetic 3D datasets such as Objaverse and the advancement of diffusion models. However, the generated multi-view images or 3D assets often suffer from simplistic textures, possibly because the textures of the objects in synthetic datasets are not as sophisticated as in- the-wild objects. This limits the practical usage of such models. On the other hand, text-to-2D methods are capable of generating images with high fidelity, partly due to the significantly larger and richer dataset they are trained on. We want to show that we can generate multi-view images with sophisticated textures by actively leveraging such a text-to-2D diffusion model in conjunction with a text-to-multi-view model while performing DDIM sampling.