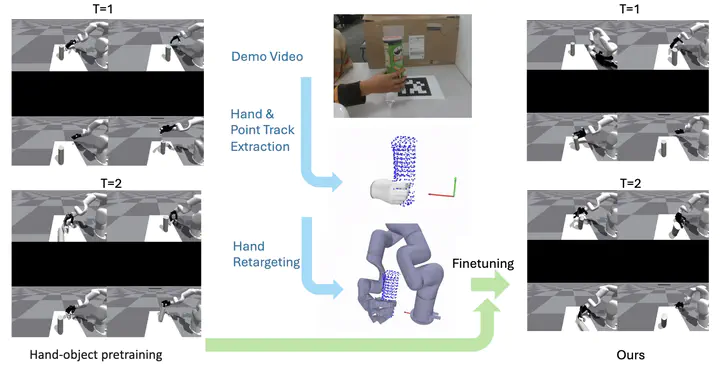

Visualization of Our Pipeline

We explore monocular 3D point tracks as a representation for learning dexterous robot policies from human videos. Compared with prior approaches that rely on object mesh reconstruction, 3D point tracking offers a robust and efficient intermediate representation for policy training. We validate our method in a setting where the policy learns from a single video demonstration, fine-tuning the baseline on our dataset, extracted using both mesh-based representations and the 3D point tracking approach. Our results highlight the potential of this representation to enhance policy learning from human videos.